16.12.2025

The Topology of Truth: A Physics-Based Neuro-Symbolic Architecture for Structural Inference

LLMs fail at Big Data topology. Discover STM: a neuro-symbolic architecture using Ising Hamiltonians to detect zero-shot anomalies in 10B+ graph nodes.

Viacheslav Kalaushin

Abstract

By late 2025, Large Language Models (LLMs) such as GPT-5.2 have achieved near-total mastery of semantic processing. However, a critical divergence has emerged: while models scale effectively on unstructured text, they exhibit fundamental "structural blindness" when applied to high-dimensional graph data (N > 10 million nodes) containing global invariants.

At Data Nexus, we propose that identifying "truth" in Big Data—whether illicit financial networks, botnet topologies, or supply chain fragilities—is not a probabilistic problem of token prediction, but a physics problem of energy minimization.

This paper introduces the Semantic Topological Model (STM), a hybrid architecture that integrates a Vectorized Physics Engine with a Linear Streaming Sieve. We demonstrate how mapping data points to interacting spins in an Ising Model allows for Zero-Shot detection of complex structural anomalies at scale, solving the quadratic bottleneck inherent in Transformer architectures.

1. The Context Horizon: Why Transformers Fail at Structure

The Transformer architecture, despite its brilliance, is constrained by the quadratic cost of Self-Attention (O(N²)). To process a dataset of 15 million banking transactions to find a cyclic laundering ring, an LLM would theoretically need to attend to every transaction against every other transaction simultaneously.
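A back-of-the-envelope sketch makes the quadratic cost concrete. It assumes, optimistically, one token per transaction and a 2-byte (fp16) score for a single attention head in a single layer. Real prompts would spend many tokens per transaction, so the true figure would be larger.

```python
def attention_matrix_bytes(n_tokens: int, bytes_per_score: int = 2) -> int:
    """Bytes needed to store one dense N x N attention-score matrix (one head, one layer)."""
    return n_tokens * n_tokens * bytes_per_score

n = 15_000_000  # optimistic assumption: one token per transaction
print(f"{n * n:.3e} pairwise scores")                                     # 2.250e+14 pairwise scores
print(f"~{attention_matrix_bytes(n) / 1e12:,.0f} TB per head per layer")  # ~450 TB per head per layer
```

Memory-efficient kernels such as FlashAttention avoid materializing this matrix, but the 2.25 × 10^14 score computations per head per layer remain.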

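In practice, engineers resort to "chunking": the data is split into fragments, and only the fragments judged relevant are retrieved into the context window (Retrieval-Augmented Generation, or RAG). However, slicing a graph destroys the very global topology necessary to identify the anomaly.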

The Local Trap: An LLM sees Transaction A → B in Chunk 1, and Transaction Y → Z in Chunk 100. It physically cannot see the cycle A → ... → Z → A that connects them.

Hallucination: When forced to reason about disjointed graph fragments, the LLM's probabilistic nature compels it to fabricate connections to satisfy the prompt.
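The local trap can be reproduced in a few lines. This hedged sketch (plain Python, hypothetical account names) lays a 100-account laundering ring out as a time-ordered edge list, splits it into chunks of 10 edges as a chunked pipeline might, and runs a standard depth-first cycle check on each chunk and on the whole list.

```python
from collections import defaultdict

def has_cycle(edges):
    """Detect a directed cycle with iterative DFS (white/grey/black colouring)."""
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)
    WHITE, GREY, BLACK = 0, 1, 2
    colour = defaultdict(int)  # every node starts WHITE (unvisited)
    for start in list(graph):
        if colour[start] != WHITE:
            continue
        colour[start] = GREY
        stack = [(start, iter(graph[start]))]
        while stack:
            node, children = stack[-1]
            child = next(children, None)
            if child is None:              # all children explored
                colour[node] = BLACK
                stack.pop()
            elif colour[child] == GREY:    # back edge: the path loops onto itself
                return True
            elif colour[child] == WHITE:
                colour[child] = GREY
                stack.append((child, iter(graph[child])))
    return False

ring = [(f"acct{i}", f"acct{(i + 1) % 100}") for i in range(100)]
chunks = [ring[k:k + 10] for k in range(0, len(ring), 10)]
print(any(has_cycle(chunk) for chunk in chunks))  # False: no chunk contains the loop
print(has_cycle(ring))                            # True: the loop exists only globally
```

Each chunk is a simple path, so every local check passes; the cycle only appears when all 100 edges are considered together.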

We conclude that semantic intuition (LLMs) must be decoupled from structural reasoning (STM).

2. Mathematical Formalism: The Ising Hamiltonian

The core of the STM Cortex is not a neural network. It is a Physics Engine. We treat data entities (accounts, IP addresses, servers) not as tokens, but as physical atoms in a state of stress.

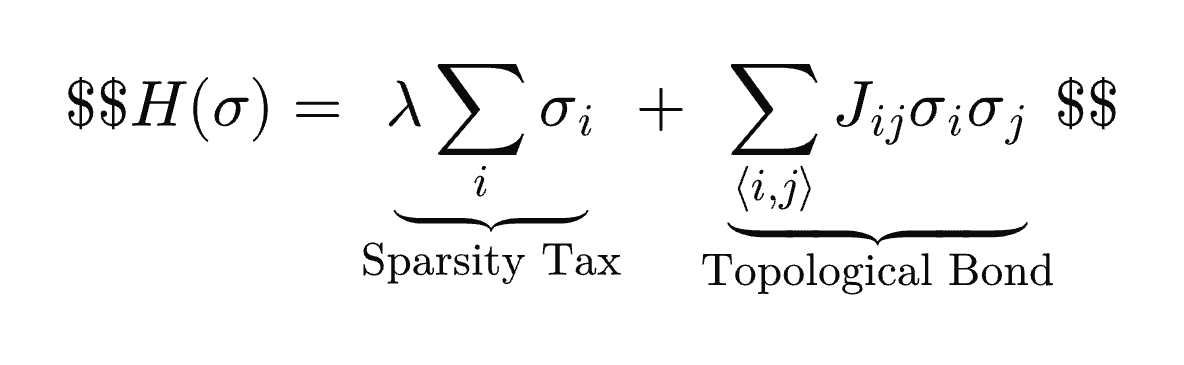

We formalize the problem of anomaly detection as finding the Ground State (minimum energy configuration) of a modified Ising Hamiltonian:
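A form of the modified Hamiltonian consistent with the term definitions below, and with the interaction term ∑ J σᵢ σⱼ quoted in Section 7, is shown here. It assumes binary states σᵢ ∈ {0, 1}, an edge set E, and non-negative bond strengths J_ij, so that the minus sign turns each active bond into the negative energy reward described below; the exact sign convention is our assumption.

```latex
H(\sigma) = \lambda \sum_{i} \sigma_i \;-\; \sum_{(i,j) \in E} J_{ij}\, \sigma_i \sigma_j,
\qquad \sigma_i \in \{0, 1\}, \quad \lambda > 0, \quad J_{ij} \ge 0
```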

The Mechanics of the Equation

σ (Sigma): The state of the node. 1 is "Signal" (Active), 0 is "Noise" (Inactive).

λ (The Sparsity Tax): A positive energy penalty applied to every active node. This is the "cost of existence." On its own, this term drives the system toward the trivial ground state in which every node is inactive (all zeros).

J (The Topological Bond): A negative energy reward granted when connected nodes are simultaneously active. Crucially, in STM, J is not learned via backpropagation; it is defined by topological invariants (e.g., flow conservation, temporal synchronicity).
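The interplay of the two terms can be checked directly. This is a minimal sketch, assuming H = λΣσᵢ − ΣJᵢⱼσᵢσⱼ with a hypothetical uniform bond J = 1.0 and borrowing λ = 0.8 from Experiment A; the function name and toy graph are illustrative.

```python
def hamiltonian(state, edges, lam):
    """H = lam * sum_i s_i - sum_(i,j) J_ij * s_i * s_j for binary node states."""
    tax = lam * sum(state.values())                                       # Sparsity Tax
    bonds = sum(j for (a, b), j in edges.items() if state[a] and state[b])  # active bonds
    return tax - bonds

triangle = {("a", "b"): 1.0, ("b", "c"): 1.0, ("a", "c"): 1.0}  # hypothetical J = 1.0
lam = 0.8
print(hamiltonian({"a": 1, "b": 1, "c": 1}, triangle, lam))  # about -0.6: the loop pays its tax
print(hamiltonian({"a": 1, "b": 0, "c": 0}, triangle, lam))  # 0.8: a lone active node only pays
print(hamiltonian({"a": 0, "b": 0, "c": 0}, triangle, lam))  # 0.0: the all-inactive baseline
```

The closed triangle sits below the all-zeros baseline, while a lone active node sits above it: the structure, not the individual node, earns its existence.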

The Phase Transition

When the system undergoes relaxation (via Simulated Annealing), a phase transition occurs. Random noise cannot generate enough negative binding energy (ΣJ) to pay the tax (λ), and thus freezes to 0. Structural anomalies (cliques, cycles, fans) generate self-sustaining energy wells, crystallizing to 1.
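Under the same assumed form H = λΣσᵢ − ΣJᵢⱼσᵢσⱼ, the phase-transition argument reduces to a local stability condition. Switching node i off changes the energy by ΔE, so an active node survives only while its bonds to active neighbours N(i) cover the tax:

```latex
\Delta E_i^{\,1 \to 0} = -\lambda + \sum_{j \in \mathcal{N}(i)} J_{ij}\,\sigma_j,
\qquad
\sigma_i = 1 \text{ is stable} \iff \sum_{j \in \mathcal{N}(i)} J_{ij}\,\sigma_j \ge \lambda
```

With λ = 0.8 and unit bonds (assumed), an isolated active node has a bond sum of 0 and always freezes out, while a node with even one active neighbour is locally stable; whether a small cluster survives as a whole depends on its total energy, which is what annealing resolves.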

3. System Architecture: The "Sieve and Focus" Pipeline

To apply this formalism to 10+ billion rows, Data Nexus developed a three-stage hybrid pipeline.

Phase I: The Linear Sieve (L1)

A classical streaming algorithm processing data in linear time O(N). It does not store the graph; it filters the stream based on scalar heuristics (e.g., temporal density, velocity).

Input: 15,000,000 transactions.

Output: A sparse subgraph of ~50-200 candidate nodes.

Function: Reduces the search space by 99.99% without losing topological candidates.
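A hypothetical sketch of such a sieve in plain Python: it consumes the stream once, in shards (100,000 rows by default, the shard size used in Experiment B), and keeps only per-account counters rather than the edge list, so memory grows with the number of accounts rather than the number of transactions. The single heuristic shown, temporal density (at least `min_events` events within a `window_s`-second span), and both thresholds are our assumptions. It also assumes the stream arrives in timestamp order.

```python
from collections import Counter
from typing import Iterable, Iterator

Row = tuple[str, str, float, float]  # (source, target, amount, timestamp_seconds)

def shards(rows: Iterable[Row], size: int) -> Iterator[list[Row]]:
    """Yield consecutive batches of at most `size` rows."""
    shard = []
    for row in rows:
        shard.append(row)
        if len(shard) == size:
            yield shard
            shard = []
    if shard:
        yield shard

def linear_sieve(rows, shard_size=100_000, min_events=5, window_s=60.0):
    """Single O(N) pass: flag accounts whose activity is dense in time (hypothetical rule)."""
    activity = Counter()
    first_seen, last_seen = {}, {}
    for shard in shards(rows, shard_size):
        for src, dst, _amount, ts in shard:
            for acct in (src, dst):
                activity[acct] += 1
                first_seen.setdefault(acct, ts)
                last_seen[acct] = ts  # valid because the stream is time-ordered
    return {acct for acct, n in activity.items()
            if n >= min_events and last_seen[acct] - first_seen[acct] <= window_s}

burst = [(f"x{i}", f"x{(i + 1) % 5}", 900.0, 10.0 + 5 * k + i) for k in range(3) for i in range(5)]
background = [(f"u{j}", f"v{j}", 25.0, 3600.0 * (j + 1)) for j in range(1000)]
stream = sorted(burst + background, key=lambda row: row[3])
print(sorted(linear_sieve(stream, shard_size=100)))  # ['x0', 'x1', 'x2', 'x3', 'x4']
```

Only the five accounts in the 15-second burst pass; the 2,000 background accounts, each seen once, are dropped before any graph is built.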

Phase II: The Vectorized Physics Engine (L2)

A custom solver built on NumPy that uses sparse matrix operations to calculate Hamiltonian deltas. Unlike state-vector quantum simulators, whose memory grows exponentially with the number of qubits, our engine scales linearly with the number of edges.

Method: Metropolis-Hastings Algorithm with Adaptive Cooling.

Result: Deterministic isolation of the "Hydra" or "Ring."
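A minimal sketch of a single-flip Metropolis annealer, assuming the form H = λΣσᵢ − ΣJᵢⱼσᵢσⱼ with binary states. The graph is stored in CSR arrays built with plain NumPy, so each flip costs O(degree) and a full sweep O(E). Two simplifications apply: a geometric cooling schedule stands in for the adaptive cooling named above, and the test graph, parameters (λ = 2.5, unit bonds) and function names are hypothetical.

```python
import numpy as np

def build_csr(n, edges, weights):
    """Symmetric CSR adjacency (indptr, indices, data) from an undirected edge list."""
    rows = np.concatenate([edges[:, 0], edges[:, 1]])
    cols = np.concatenate([edges[:, 1], edges[:, 0]])
    vals = np.concatenate([weights, weights])
    order = np.argsort(rows, kind="stable")
    indptr = np.zeros(n + 1, dtype=np.int64)
    np.add.at(indptr, rows + 1, 1)  # count neighbours per node
    return np.cumsum(indptr), cols[order], vals[order]

def anneal(indptr, indices, data, lam, sweeps=300, t_start=3.0, t_end=0.05, seed=0):
    """Metropolis sweeps over binary states with a geometric cooling schedule."""
    rng = np.random.default_rng(seed)
    n = len(indptr) - 1
    state = rng.integers(0, 2, size=n)
    for t in np.geomspace(t_start, t_end, sweeps):
        for i in rng.permutation(n):
            lo, hi = indptr[i], indptr[i + 1]
            field = data[lo:hi] @ state[indices[lo:hi]]  # sum_j J_ij * s_j
            delta = (1 - 2 * state[i]) * (lam - field)   # energy change of flipping i
            if delta <= 0 or rng.random() < np.exp(-delta / t):
                state[i] ^= 1
    return state

clique = [(i, j) for i in range(8) for j in range(i + 1, 8)]  # hidden 8-node structure
noise = [(k, k + 1) for k in range(8, 59)] + [(59, 8)]        # 52-node noise ring
edges = np.array(clique + noise + [(0, 8)])                   # one bridge edge
indptr, indices, data = build_csr(60, edges, np.ones(len(edges)))
state = anneal(indptr, indices, data, lam=2.5)
print(np.flatnonzero(state))
```

With λ = 2.5, a set of nodes lowers the energy only if its average internal degree exceeds 5. The clique (average degree 7) qualifies and the ring (at most 2) never does, so the annealer should return nodes 0 to 7.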

Phase III: Symbolic Interpretation (L3)

The crystallized invariant is passed to a semantic layer (LLM). Because the input is now a verified fact (e.g., "These 50 nodes form a closed loop"), the LLM has no structural gaps to fill with invented connections: it simply narrates the physical result.
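The hand-off can be made explicit: before anything reaches the LLM, the crystallized node set is checked against the raw edges, and only a passing check becomes a fact string. The sketch is hypothetical (function names, the undirected-edge assumption, and the wording of the fact are ours) and verifies the "closed loop" example from the text.

```python
from collections import defaultdict

def forms_closed_loop(nodes, edges):
    """True if the subgraph induced by `nodes` is exactly one simple cycle."""
    nodes = set(nodes)
    adj = defaultdict(set)
    for a, b in edges:
        if a in nodes and b in nodes and a != b:
            adj[a].add(b)
            adj[b].add(a)
    if len(nodes) < 3 or any(len(adj[v]) != 2 for v in nodes):
        return False
    # Every node has degree 2, so the subgraph is a union of cycles; require exactly one.
    start = next(iter(nodes))
    seen, stack = {start}, [start]
    while stack:
        for nb in adj[stack.pop()]:
            if nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return len(seen) == len(nodes)

def fact_for_llm(nodes, edges):
    """Return a verified statement for the semantic layer, or None if nothing is proven."""
    if not forms_closed_loop(nodes, edges):
        return None
    return f"These {len(set(nodes))} nodes form a closed loop: {sorted(set(nodes))}"

ring5 = [(i, (i + 1) % 5) for i in range(5)]
print(fact_for_llm(range(5), ring5))       # These 5 nodes form a closed loop: [0, 1, 2, 3, 4]
print(fact_for_llm(range(5), ring5[:-1]))  # None: an open path is not a loop
```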

4. Experimental Validation: Stress-Testing the Physics

To validate the STM, we moved beyond theoretical simulations. We designed three rigorous experiments to test the architecture's limits regarding Topology, Scale, and Multi-Modal Fusion.

Here is the detailed breakdown of our methodology and the findings.

Experiment A: "The Hydra" (Topological Complexity)

Objective: Test the system's ability to detect a hierarchical network where individual nodes (leaves) are statistically indistinguishable from noise.

Setup: We generated a graph of 5,000 nodes. We injected a "Hydra" structure: 1 Kingpin → 10 Lieutenants → 50 Mules. The "Mules" transacted small amounts, identical to the 15,000 random "noise" transactions surrounding them.

The Challenge: Traditional heuristic filters failed here. A simple rule like "amount > 10,000" caught the Kingpin but missed the network. A rule like "amount < 500" flagged the Mules but also flagged 90% of legitimate users (a high false-positive rate).

Method: We ran the Physics Engine with a calibrated Sparsity Tax (λ = 0.8).

New Findings (The "Resonance" Effect): We encountered a phenomenon we call "Topological Resonance." Initially, the "Mules" had low energy and risked being filtered out. However, because they were connected to "Lieutenants" (who had strong bonds), the Lieutenants effectively "subsidized" the existence of the Mules energetically. The structure survived not because the individual nodes were strong, but because the shape was self-reinforcing.
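The subsidy can be checked with plain energy bookkeeping. The sketch assumes H = λΣσᵢ − ΣJσᵢσⱼ, a uniform bond J = 1.0, and an even split of 5 Mules per Lieutenant (all hypothetical; only λ = 0.8 and the 1 → 10 → 50 shape come from the setup above). A Mule's single bond pays its tax only when its Lieutenant is also active, which is the "subsidy" in energetic terms.

```python
LAM = 0.8  # Sparsity Tax from Experiment A
J = 1.0    # hypothetical uniform bond strength

# Node 0 = Kingpin, 1..10 = Lieutenants, 11..60 = Mules (5 per Lieutenant, assumed).
edges = [(0, lt) for lt in range(1, 11)]
edges += [(lt, 11 + 5 * (lt - 1) + m) for lt in range(1, 11) for m in range(5)]

def energy(active):
    """H = LAM * |active| - J * (number of bonds with both endpoints active)."""
    bonds = sum(1 for a, b in edges if a in active and b in active)
    return LAM * len(active) - J * bonds

hydra = set(range(61))
print(energy({11}))                 # 0.8: a lone Mule only pays its tax
print(energy(set(range(11, 61))))   # ~40: the Mules alone share no bonds
print(energy(hydra))                # ~ -11.2: the full structure sits below zero
print(energy(hydra - {11}))         # ~ -11.0: dropping one Mule raises the energy by 0.2
```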

Result: 100% Recall. The system decapitated the Hydra, identifying all 62 actors, while the noise (lacking structural subsidy) evaporated.

Experiment B: "The Dark Pool" (Extreme Scale)

Objective: Process 15 Million Transactions (approx. 1GB CSV) on standard hardware to validate the "Big Data" viability.

Setup: A synthetic dataset mimicking High-Frequency Trading (HFT). Hidden Signal: A closed ring of 50 traders executing wash trades.

The Challenge (The "16 Petabyte" Wall): Our initial attempt to load the full 15M-node graph into a standard state-vector quantum simulator caused an immediate crash. A state vector for n qubits holds 2^n complex amplitudes, so 16 petabytes of RAM is exhausted at roughly 50 qubits, and a 15-million-qubit state vector lies astronomically beyond that. This confirmed that "Pure Quantum" is impossible for Big Data today.
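The memory wall follows from how state-vector simulators work: n qubits need 2^n complex amplitudes. A quick calculation, assuming complex128 storage at 16 bytes per amplitude, shows that 16 PiB is already reached at 50 qubits. For 15 million qubits, the byte count itself has millions of decimal digits.

```python
import math

BYTES_PER_AMPLITUDE = 16  # one complex128 amplitude = two float64 values
PIB = 2 ** 50             # bytes in one pebibyte

def state_vector_bytes(n_qubits: int) -> int:
    """Memory for a dense state vector of n qubits: 2**n amplitudes."""
    return BYTES_PER_AMPLITUDE * 2 ** n_qubits

print(state_vector_bytes(50) / PIB)  # 16.0

# For 15 million qubits, count the decimal digits of the byte total instead of computing it.
digits = math.floor(15_000_000 * math.log10(2) + math.log10(BYTES_PER_AMPLITUDE)) + 1
print(f"{digits:,} decimal digits")
```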

Method: We implemented the L1 Streaming Sieve. We processed the data in shards of 100,000 rows. The stream filtered nodes based on local heuristics, reducing 15 million nodes to ~144 candidate nodes. This subgraph was then passed to the Vectorized Physics Engine.

Result: The pipeline processed 15M rows in under 2 minutes. The 50-node ring crystallized with zero false positives.

Experiment C: "Panopticon" (Multi-Modal Tensor Fusion)

Objective: Detect "Sleeping Cells"—actors with no transactional links but suspicious temporal synchronization.

Setup: 20 Insiders. They never sent money to each other. They simply traded large volumes within the same 5-millisecond window.

The Challenge (The Weighting Problem): How do you compare "Time" and "Money" in a single equation? Initially, the system ignored the time signal because the "Money" bonds were too strong mathematically.

Method: We moved to a Tensor Hamiltonian, creating "Virtual Edges" between nodes that acted in the same time window.
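The virtual-edge construction can be sketched as follows. This is a hypothetical illustration rather than the Tensor Hamiltonian itself: it turns "traded within 5 ms of each other" into bonds using a sliding window over time-sorted trades, then fuses them with money bonds through per-modality weights `alpha` and `beta`. Independent weights are one simple way to keep strong money bonds from drowning out the time signal. All names and weight values are assumptions.

```python
from collections import defaultdict

def temporal_virtual_edges(trades, window_s=0.005, weight=1.0):
    """Bond every pair of distinct accounts whose trades fall within `window_s` seconds.

    trades: iterable of (account, timestamp_seconds). Sorting is O(T log T); the
    sliding window is then linear in T plus the number of pairs emitted.
    """
    ordered = sorted(trades, key=lambda trade: trade[1])
    bonds = {}
    start = 0
    for end, (acct, ts) in enumerate(ordered):
        while ts - ordered[start][1] > window_s:  # slide the window's left edge
            start += 1
        for other, _ in ordered[start:end]:
            if other != acct:
                bonds[tuple(sorted((acct, other)))] = weight
    return bonds

def fuse(money_bonds, time_bonds, alpha=1.0, beta=1.0):
    """J_ij = alpha * J_money + beta * J_time (hypothetical modality weights)."""
    fused = defaultdict(float)
    for key, j in money_bonds.items():
        fused[key] += alpha * j
    for key, j in time_bonds.items():
        fused[key] += beta * j
    return dict(fused)

insiders = [(f"ins{k}", 1000.0 + 0.001 * k) for k in range(4)]  # 4 trades within 3 ms
noise = [(f"acct{k}", 1000.5 + 0.75 * k) for k in range(10)]    # spread over seconds
time_bonds = temporal_virtual_edges(insiders + noise)
print(sorted(time_bonds))  # the 6 insider pairs, with no money links at all
```

A sliding window is used instead of fixed 5 ms buckets so that two trades straddling a bucket boundary still bond.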

Result: The system identified the 20 insiders solely based on their temporal footprint, demonstrating STM's utility for counter-intelligence and insider trading detection.

5. Scientific Lineage and Differentiation

The Semantic Topological Model (STM) does not exist in a vacuum. It represents a convergence of computational physics, graph theory, and neuro-symbolic AI. We stand on the shoulders of giants, building upon foundational concepts while introducing critical architectural divergences necessary to solve the specific problem of Zero-Shot Structural Inference at Scale.

Energy-Based Models (EBMs)

We owe a debt to the work of Yann LeCun. EBMs established the paradigm of associating "correct" data configurations with low energy states.

The STM Divergence: EBMs typically require massive training to learn the energy landscape via gradient descent. STM is a training-free (Zero-Shot) architecture. We do not learn the Hamiltonian; we define it based on universal topological laws. This allows STM to detect novel anomaly patterns immediately.

Belief Propagation and Graphical Models

For decades, probabilistic graphical models, typically solved with Belief Propagation (BP), have been the standard tool for inferring probabilities over networks.

The STM Divergence: Belief Propagation notoriously struggles to converge on "loopy" graphs (graphs with many cycles). In financial laundering networks, cycles are not a bug—they are the signal. STM’s annealing-based solver is robust to topological frustration, easily identifying the global loops that cause BP algorithms to oscillate and fail.

Spectral Graph Theory

Classical Spectral Graph Theory offers elegant methods for clustering using matrix eigenvalues (e.g., the Fiedler vector).

The STM Divergence: While mathematically beautiful, spectral methods that rely on full matrix diagonalization carry cubic computational complexity, O(N³). For 15 million nodes, this is computationally infeasible. STM's Vectorized Physics Engine instead scales linearly with the number of edges, using sparse matrix operations to process Big Data.
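For contrast, the spectral baseline fits in a few lines. This sketch (NumPy, a toy two-cluster graph of our own) takes the dense eigendecomposition route whose O(N³) cost is the bottleneck described above. Sparse iterative eigensolvers such as Lanczos can compute just the Fiedler vector far more cheaply; the cubic figure applies to full diagonalization.

```python
import numpy as np

def fiedler_partition(adjacency: np.ndarray) -> np.ndarray:
    """Split a graph in two by the sign of the Fiedler vector of its Laplacian."""
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    _eigenvalues, eigenvectors = np.linalg.eigh(laplacian)  # dense, O(N^3); ascending order
    fiedler = eigenvectors[:, 1]  # eigenvector of the second-smallest eigenvalue
    return fiedler >= 0

# Two 4-node cliques joined by a single bridge edge (3-4).
A = np.zeros((8, 8))
for block in (range(0, 4), range(4, 8)):
    for i in block:
        for j in block:
            if i != j:
                A[i, j] = 1.0
A[3, 4] = A[4, 3] = 1.0
side = fiedler_partition(A)
print(side)  # one clique on each side; which side is True depends on the eigenvector's sign
```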

Simulated Annealing and Factor Graphs

We utilize Simulated Annealing as our core solver.

The STM Divergence: Our innovation is not the algorithm itself, but the Tensor Hamiltonian Formulation. By introducing the concept of a "Sparsity Tax" (Lambda), we create a controllable phase transition between noise and structure. This turns a generic optimization tool into a precise filter for semantic truth.

6. Competitive Landscape (December 2025)

The market for "Structure Discovery" is evolving. Here is how Data Nexus STM compares to the current ecosystem.

Palantir (The Ontology Approach)

Palantir relies on rigid, human-defined "Ontologies" and armies of Forward Deployed Engineers. It is a "Human-First" tool.

STM Difference: STM is Physics-First. It is autonomous. You do not need to define what a money laundering ring looks like; the Hamiltonian detects the mathematical anomaly automatically via energy minimization.

Neo4j & GraphRAG (The Retrieval Approach)

In 2025, "GraphRAG" is the industry standard for helping LLMs retrieve data.

STM Difference: These are Retrieval systems, not Reasoning systems. They can fetch data, but they cannot perform the global energetic optimization required to detect a hidden, distributed threat. They store the graph; STM solves the graph.

RelationalAI (The Symbolic Approach)

These platforms use strict symbolic logic and causal reasoning.

STM Difference: Symbolic logic is brittle; one dirty data point breaks the chain. STM is robust. Like a physical system, it settles into the lowest energy state even when the data is imperfect (noisy), making it viable for messy, real-world enterprise data.

7. Future Outlook: The Evolution of Neuro-Symbolic Architecture (2026-2028)

The deployment of the Semantic Topological Model is not an endpoint, but a proof of concept for a broader shift in AI architecture. Based on our R&D at the Nexus Intelligence TestLab, we forecast three fundamental engineering shifts over the next 36 months.

I. From Prompt Engineering to Objective Function Engineering

The current paradigm of "Prompt Engineering"—trying to coerce a probabilistic model into logical behavior via natural language—is a dead end for critical systems. Natural language is inherently ambiguous.

We are moving toward Objective Engineering. Instead of asking an AI to "analyze this network," engineers will define Physical Objectives via rigorous Loss Functions and Hamiltonians (e.g., "Minimize Energy H subject to Constraints C").

The Shift: Control interfaces will migrate from chat-based prompts (unpredictable) to mathematical constraints (deterministic).

The Result: AI behavior becomes verifiable. A system either satisfies the Hamiltonian equation, or it does not. There is no middle ground for hallucination.
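Checking a candidate configuration against an objective is deterministic, which is one concrete meaning of "verifiable." The hypothetical checker below, assuming H = λΣσᵢ − ΣJσᵢσⱼ, certifies that no single-node flip lowers the energy or names the node that breaks the condition. It certifies a local minimum; proving a global ground state is in general intractable.

```python
def is_single_flip_stable(state, neighbours, lam):
    """Return (True, None) if no single flip lowers H, else (False, offending_node).

    state: {node: 0 or 1}; neighbours: {node: {neighbour: J}}.
    """
    for node, s in state.items():
        field = sum(j * state[nb] for nb, j in neighbours[node].items())
        delta = (1 - 2 * s) * (lam - field)  # energy change if `node` were flipped
        if delta < 0:
            return False, node
    return True, None

tri = {"a": {"b": 1.0, "c": 1.0}, "b": {"a": 1.0, "c": 1.0}, "c": {"a": 1.0, "b": 1.0}, "d": {}}
print(is_single_flip_stable({"a": 1, "b": 1, "c": 1, "d": 0}, tri, 0.8))  # (True, None)
print(is_single_flip_stable({"a": 1, "b": 1, "c": 1, "d": 1}, tri, 0.8))  # (False, 'd')
```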

II. The "System 2" Architectural Pattern

Cognitive science distinguishes between System 1 (fast, intuitive, pattern-matching) and System 2 (slow, deliberative, logical). Current LLMs are purely System 1 engines.

We predict the standard enterprise AI stack will evolve into a bicameral architecture:

The Generator (System 1): LLMs will remain responsible for semantic parsing, code generation, and human interaction.

The Controller (System 2): Physics-based solvers (like STM) and symbolic logic engines will act as the "Executive Function." They will manage the global context, verify the factual consistency of the LLM's outputs, and reject hallucinations before they reach the user.

Implementation: This will not be a single model, but a Compound AI System where the "Controller" manages the state and the "Generator" handles the interface.
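A compound system of this kind can be sketched with the LLM treated as a black box. In this hypothetical sketch, `generator_claim` stands in for structured output from the Generator (a narrative plus the links it asserts), and the Controller releases the narrative only if every asserted link exists in the verified graph. No real LLM API is called.

```python
def unsupported_links(claimed_edges, graph_edges):
    """Controller check: claimed (undirected) links that are absent from the verified graph."""
    known = {frozenset(edge) for edge in graph_edges}
    return [edge for edge in claimed_edges if frozenset(edge) not in known]

def respond(generator_claim, graph_edges):
    """Release the Generator's text only if every link it asserts is backed by the data."""
    missing = unsupported_links(generator_claim["edges"], graph_edges)
    if missing:
        return f"Rejected: {len(missing)} claimed link(s) not in the data: {missing}"
    return generator_claim["text"]

graph = [("A", "B"), ("B", "C")]
print(respond({"text": "A paid B, and B paid C.", "edges": [("A", "B"), ("B", "C")]}, graph))
print(respond({"text": "A, B and C form a ring.", "edges": [("A", "B"), ("C", "A")]}, graph))
# Rejected: 1 claimed link(s) not in the data: [('C', 'A')]
```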

III. Mathematical Isomorphism and the Quantum Bridge

The most critical feature of the STM architecture is its isomorphism with Quantum Annealing. The Ising Hamiltonian we use today on CPUs (H = ∑ J σᵢ σⱼ) is mathematically equivalent, up to a linear change of variables, to the problem formulation used by Quantum Processing Units (QPUs) like D-Wave or QuEra.
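The equivalence needs one change of variables, because annealing hardware such as D-Wave's is programmed with spins sᵢ ∈ {−1, +1} rather than 0/1 states. Substituting σᵢ = (1 + sᵢ)/2 turns the Sparsity Tax and the bonds into local fields hᵢ, couplings, and a constant offset. The sketch below (hypothetical helper names, the assumed form λΣσᵢ − ΣJσᵢσⱼ, toy bond values) performs the mapping and checks by brute force that both energies agree on every configuration.

```python
from itertools import product

def qubo_to_ising(n, bonds, lam):
    """Map H = lam*sum(x) - sum(J*x_i*x_j), x in {0,1}, onto spins via x = (1 + s) / 2.

    Returns (h, K, offset) such that H = sum(h_i*s_i) + sum(K_ij*s_i*s_j) + offset.
    """
    h = [lam / 2] * n
    K = {}
    offset = n * lam / 2
    for (i, j), J in bonds.items():
        h[i] -= J / 4
        h[j] -= J / 4
        K[(i, j)] = -J / 4
        offset -= J / 4
    return h, K, offset

def qubo_energy(x, bonds, lam):
    return lam * sum(x) - sum(J * x[i] * x[j] for (i, j), J in bonds.items())

def ising_energy(s, h, K, offset):
    field_term = sum(hi * si for hi, si in zip(h, s))
    coupling_term = sum(k * s[i] * s[j] for (i, j), k in K.items())
    return field_term + coupling_term + offset

bonds = {(0, 1): 1.0, (1, 2): 1.0, (0, 2): 1.0, (2, 3): 0.5}
h, K, offset = qubo_to_ising(4, bonds, lam=0.8)
worst = max(abs(qubo_energy(x, bonds, 0.8) - ising_energy([2 * xi - 1 for xi in x], h, K, offset))
            for x in product((0, 1), repeat=4))
print(worst < 1e-12)  # True: identical energies on all 16 configurations
```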

This means the algorithms we build today are forward-compatible. We are not simulating quantum computers; we are running quantum-native logic on classical hardware via thermal relaxation.

The Horizon: As QPUs scale in qubit count (10,000+ qubits) and coherence, we will not need to rewrite our logic. We will simply "hot-swap" the solver backend.

The Impact: NP-Hard topological problems (perfect logistics, protein folding, decryption) that currently take minutes on a CPU cluster will be solved in milliseconds via Quantum Tunneling.

Conclusion

At Data Nexus, we operate on a simple premise: Intuition is not a strategy.

When the integrity of a financial system, a national power grid, or a supply chain is at stake, "likely" is not enough. You need "proven." The Semantic Topological Model represents a shift from probabilistic guessing to physical verification. By treating data as a physical system, we can burn away the noise of Big Data and leave only the crystalline structure of truth.

This architecture proves that the future of AI isn't just about reading more text—it's about calculating the fundamental structure of reality.

About Data Nexus

Data Nexus is not a product vendor. We are a consulting and engineering firm dedicated to helping businesses and organizations make decisions based on rigorous data, not intuition.

We design and implement analytical systems, data architectures, and AI solutions for complex, high-load, and heterogeneous environments. We operate in the critical zone where standard BI, ML, and generic LLM approaches fail to provide reliable answers.

Our approach combines high-level strategy, deep engineering mindset, and applied research. From diagnosing data processes to developing custom analytical tools, we tailor every solution to the client's specific mathematical reality.

The Nexus Intelligence TestLab

The methods described in this paper, including the Semantic Topological Model (STM), are part of our internal R&D initiatives at the Nexus Intelligence TestLab. These are not boxed software products we sell, but proprietary methodologies and structural analysis frameworks we deploy as part of our engineering expertise to solve the "unsolvable" problems for our clients.